Introduction

Building an accurate predictive model is only half the job in machine learning. A model must also be validated to confirm that it works on real-world data, not just on the examples it was trained on. Model validation is the process of assessing a model’s performance and its ability to generalize. This article covers the main model validation techniques in machine learning, why validation matters, and how each method works.

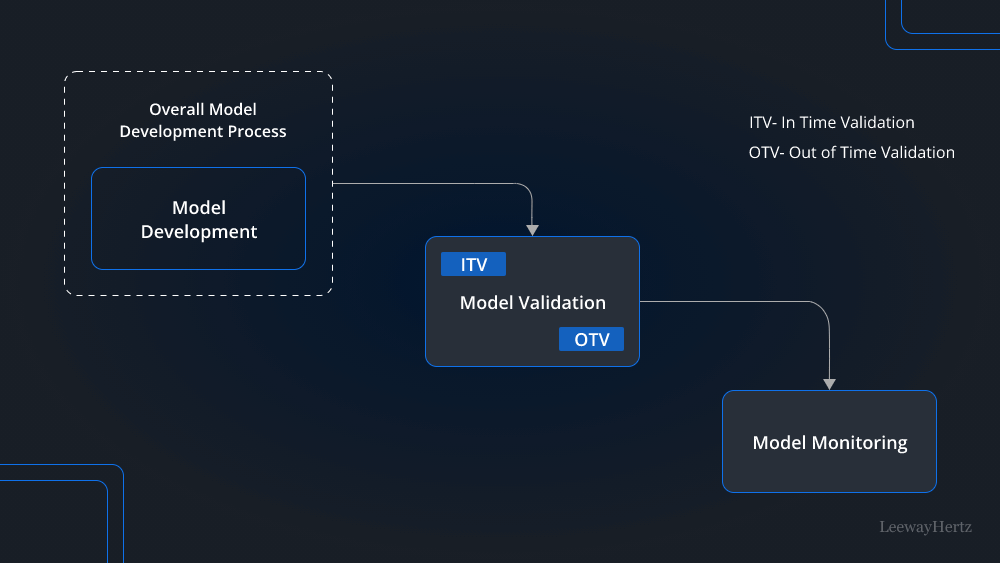

Understanding Model Validation

Model validation matters because it measures how well a trained model performs on unseen data. A model that excels on the training data but fails to generalize to new data has overfit and is of little practical use. The primary goal of model validation is therefore to estimate the model’s performance on data it never encountered during training.

Cross-Validation

One widely used model validation technique is cross-validation. Cross-validation partitions the dataset into multiple subsets, trains the model on some of them, and validates it on the subset that was held out. This process is repeated with a different held-out subset each time, and the results are averaged to obtain a more robust performance estimate. Common types of cross-validation include k-fold cross-validation, stratified cross-validation, and leave-one-out cross-validation.

K-Fold Cross-Validation:

In k-fold cross-validation, the dataset is divided into k subsets (folds) of roughly equal size, and the model is trained k times. Each time, a different fold serves as the validation set and the remaining k − 1 folds form the training set, so every sample is used for validation exactly once. The performance metrics from the k iterations are then averaged to assess overall model performance.
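The procedure above can be sketched in plain Python. This is a minimal illustration, not a reference implementation: the helper names `k_fold_indices` and `k_fold_score`, the toy mean-predictor model, and the data are all assumptions made for the example. It also uses contiguous folds for clarity, whereas in practice the data is usually shuffled first.

```python
def k_fold_indices(n_samples, k):
    """Yield (train_idx, val_idx) pairs for k contiguous folds."""
    # Spread the remainder over the first folds so sizes differ by at most one.
    fold_sizes = [n_samples // k + (1 if i < n_samples % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        val_idx = list(range(start, start + size))
        train_idx = list(range(0, start)) + list(range(start + size, n_samples))
        yield train_idx, val_idx
        start += size


def k_fold_score(xs, ys, fit, score, k=5):
    """Train k times, validating on a different fold each time; return (mean, per-fold scores)."""
    scores = []
    for train_idx, val_idx in k_fold_indices(len(xs), k):
        model = fit([xs[i] for i in train_idx], [ys[i] for i in train_idx])
        scores.append(score(model, [xs[i] for i in val_idx], [ys[i] for i in val_idx]))
    return sum(scores) / len(scores), scores


# Hypothetical toy model: always predict the mean of the training targets.
def fit_mean(xs, ys):
    return sum(ys) / len(ys)


def mean_abs_error(model, xs, ys):
    return sum(abs(y - model) for y in ys) / len(ys)


xs = list(range(10))
ys = [float(x) for x in xs]
mean_score, fold_scores = k_fold_score(xs, ys, fit_mean, mean_abs_error, k=5)
print("per-fold MAE:", fold_scores)
print("mean MAE:", mean_score)
```

Passing `fit` and `score` as functions keeps the splitting logic independent of any particular model, so the same loop could wrap a real estimator.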

Stratified Cross-Validation:

Stratified cross-validation ensures that each subset keeps approximately the same distribution of target classes as the original dataset. This is particularly useful with imbalanced datasets, where a purely random split could leave an underrepresented class with few or no samples in some folds.
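One simple way to obtain stratified folds is to deal the samples of each class out to the folds in turn, like cards. The sketch below assumes this round-robin scheme; the function name `stratified_folds` and the imbalanced label list are hypothetical, and library implementations may assign samples differently.

```python
from collections import Counter, defaultdict


def stratified_folds(labels, k):
    """Assign each sample index to one of k folds, class by class, round-robin."""
    # Group sample indices by their class label.
    by_class = defaultdict(list)
    for idx, label in enumerate(labels):
        by_class[label].append(idx)

    # Deal each class's indices across the folds in turn.
    folds = [[] for _ in range(k)]
    for indices in by_class.values():
        for position, idx in enumerate(indices):
            folds[position % k].append(idx)
    return folds


# Hypothetical imbalanced dataset: 8 negatives and 4 positives (2:1 ratio).
labels = ["neg"] * 8 + ["pos"] * 4
folds = stratified_folds(labels, k=4)
fold_counts = [Counter(labels[i] for i in fold) for fold in folds]
for number, counts in enumerate(fold_counts):
    print(f"fold {number}: {dict(counts)}")
```

With these labels every fold receives two negatives and one positive, matching the 2:1 ratio of the full dataset. When class sizes are not divisible by k, the folds can only approximate the original ratio.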

Leave-One-Out Cross-Validation:

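Leave-one-out cross-validation is a special case of k-fold cross-validation where k equals the number of samples in the dataset. Each model is trained on all samples but one and validated on the single held-out sample. This uses nearly all the data for training in every round, but it requires one training run per sample and can be computationally expensive for large datasets.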
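A small sketch makes the cost visible: the loop below fits one model per sample. The generator name `leave_one_out`, the four target values, and the mean-predictor model are assumptions chosen only to keep the example short.

```python
def leave_one_out(n_samples):
    """Yield (train_idx, val_idx) pairs where each validation set is a single sample."""
    for held_out in range(n_samples):
        train_idx = [i for i in range(n_samples) if i != held_out]
        yield train_idx, [held_out]


# Hypothetical targets; the "model" simply predicts the mean of its training targets.
ys = [2.0, 4.0, 6.0, 8.0]
errors = []
for train_idx, val_idx in leave_one_out(len(ys)):
    prediction = sum(ys[i] for i in train_idx) / len(train_idx)
    errors.append(abs(ys[val_idx[0]] - prediction))

loo_mae = sum(errors) / len(errors)
print(f"{len(errors)} fits, leave-one-out MAE = {loo_mae:.4f}")
```

The number of fits equals the number of samples, which is why this approach scales poorly as the dataset grows.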

Holdout Validation

Holdout validation is a simpler yet effective technique. It splits the dataset into two parts: a training set and a validation set. The model is trained on the training set and evaluated on the validation set. While easy to implement, holdout validation relies on a single split, so its estimate can be misleading if the validation set happens not to be representative of the data as a whole, especially when the dataset is small.
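A holdout split can be sketched in a few lines. The function name `holdout_split`, the 20% validation fraction, and the seed values are illustrative assumptions rather than recommended settings. Shuffling before the split guards against any ordering in the original data.

```python
import random


def holdout_split(samples, val_fraction=0.2, seed=0):
    """Shuffle a copy of the samples and split it into (train, validation) lists."""
    rng = random.Random(seed)  # a fixed seed makes the split reproducible
    shuffled = list(samples)
    rng.shuffle(shuffled)
    n_val = max(1, int(round(len(shuffled) * val_fraction)))
    return shuffled[n_val:], shuffled[:n_val]


data = list(range(100))  # hypothetical dataset of 100 sample ids
train, val = holdout_split(data, val_fraction=0.2, seed=42)
print(len(train), "training samples,", len(val), "validation samples")
```

Changing the seed changes which samples end up in the validation set, which is the source of the split-to-split variability described above.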

Validation Curves

Validation curves are graphical representations of a model’s performance as a function of a hyperparameter. The training score and the validation score are typically plotted together against different values of the hyperparameter. A large gap between the two indicates overfitting, while poor scores on both indicate underfitting. Practitioners can then pick the setting that gives the best validation performance, which makes this technique particularly useful for hyperparameter tuning.
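The numbers behind such a curve can be computed with a simple sweep. The sketch below is an assumption-laden illustration: it uses a hand-written k-nearest-neighbors regressor on synthetic noisy sine data, with the number of neighbors `k` as the hyperparameter, and it prints the values instead of plotting them.

```python
import math
import random


def knn_predict(train, x, k):
    """Average the targets of the k training points nearest to x."""
    nearest = sorted(train, key=lambda point: abs(point[0] - x))[:k]
    return sum(y for _, y in nearest) / k


def mse(train, points, k):
    """Mean squared error of the k-NN model (fitted on train) over the given points."""
    return sum((y - knn_predict(train, x, k)) ** 2 for x, y in points) / len(points)


# Synthetic data (an assumption for this example): a noisy sine wave.
rng = random.Random(0)
data = [(x / 10, math.sin(x / 10) + rng.gauss(0, 0.3)) for x in range(60)]
rng.shuffle(data)
train, val = data[:40], data[40:]

# One row per hyperparameter value: (k, training error, validation error).
curve = []
for k in [1, 3, 5, 9, 15, 25]:
    curve.append((k, mse(train, train, k), mse(train, val, k)))

best_k = min(curve, key=lambda row: row[2])[0]
for k, train_err, val_err in curve:
    print(f"k={k:2d}  train MSE={train_err:.3f}  validation MSE={val_err:.3f}")
print("lowest validation error at k =", best_k)
```

With `k=1` each training point is its own nearest neighbor, so the training error is zero even though the validation error is not. That gap is the overfitting signal a validation curve is meant to expose.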

Conclusion

Creating accurate and generalizable models is central to machine learning, and model validation is the test of whether a model can handle new, unseen data. Techniques such as cross-validation, holdout validation, and validation curves help verify that a model not only performs well on training data but is also likely to perform well in real-world applications.

The techniques discussed in this article give practitioners a foundation for evaluating and refining their models, and ultimately for building more robust and reliable machine learning systems.