Introduction

As organizations increasingly rely on artificial intelligence (AI) models for decision-making and automation, those models become attractive targets, and safeguarding them against attack becomes a core requirement. This article examines AI model security: the main threats, the challenges involved in defending against them, and practical strategies for building more secure models.

The Rise of AI in Modern Society

Before delving into the security aspects, it’s essential to acknowledge the pervasive role AI plays in contemporary society. From predictive analytics in finance to image recognition in healthcare, AI models have become indispensable. As their influence expands, so does the need to fortify these models against potential vulnerabilities and attacks.

Understanding AI Model Security

1. Threat Landscape Analysis

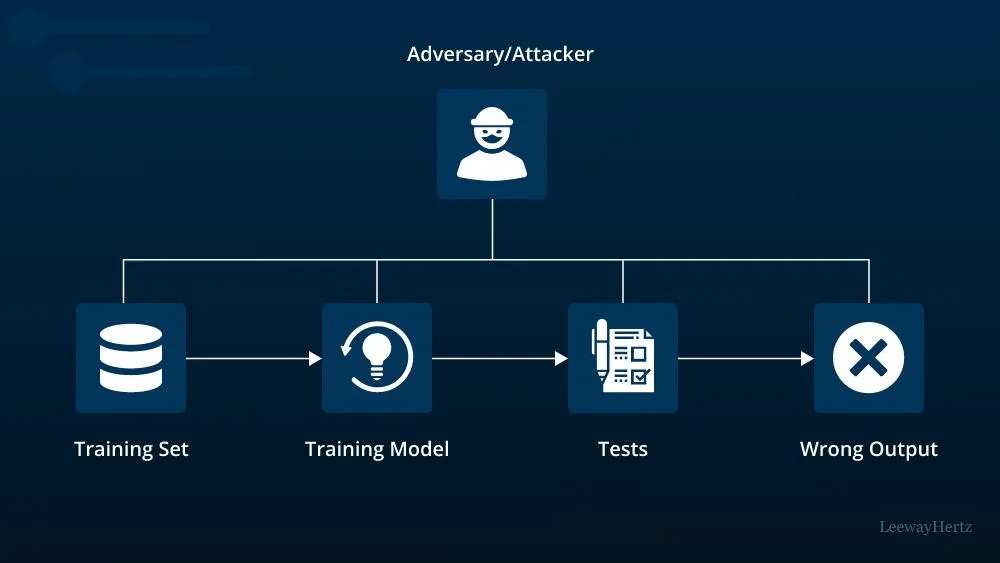

Before fortifying AI models, understanding the threat landscape is crucial. Threats can manifest in various forms, from adversarial attacks aiming to manipulate model outputs to data poisoning attempts that compromise the training data integrity. A comprehensive security strategy must consider potential threats at every stage of the AI model lifecycle.

2. Securing the Model Training Phase

The foundation of a robust AI model lies in its training phase. Ensuring the security of this phase involves safeguarding the training data from malicious manipulation and protecting the training infrastructure from unauthorized access. Employing encryption techniques and access controls can mitigate risks associated with the training phase.
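One way to make tampering with training data detectable is to fingerprint every record and sign the resulting manifest. The following is a minimal sketch, not a complete solution: the record format, the `fingerprint`, `sign_manifest`, and `verify_dataset` helpers, and the hard-coded key are all assumptions made for illustration, and a real pipeline would load the key from a secrets manager.

```python
import hashlib
import hmac


def fingerprint(records):
    """Return a SHA-256 hex digest for each training record (bytes)."""
    return [hashlib.sha256(r).hexdigest() for r in records]


def sign_manifest(digests, key):
    """HMAC-SHA256 over the ordered digests, so the manifest cannot be edited unnoticed."""
    return hmac.new(key, "\n".join(digests).encode(), hashlib.sha256).hexdigest()


def verify_dataset(records, digests, signature, key):
    """Return the indices of records that no longer match the signed manifest."""
    expected = sign_manifest(digests, key)
    if not hmac.compare_digest(expected, signature):
        raise ValueError("manifest signature mismatch")
    current = fingerprint(records)
    if len(current) != len(digests):
        raise ValueError("record count changed")
    return [i for i, (a, b) in enumerate(zip(current, digests)) if a != b]


# Hypothetical key and records, for illustration only.
key = b"demo-key-not-for-production"
records = [b"5.1,3.5,setosa", b"6.2,2.9,versicolor", b"7.7,3.0,virginica"]
digests = fingerprint(records)
signature = sign_manifest(digests, key)

# Simulate a poisoning attempt that flips one label.
tampered = list(records)
tampered[1] = b"6.2,2.9,setosa"
changed = verify_dataset(tampered, digests, signature, key)
print(changed)  # [1]
```

A check like this detects modification after the manifest was created. It does not help if the data was already poisoned when it was first collected.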

3. Adversarial Attacks and Countermeasures

Adversarial attacks pose a significant threat to AI models. These attacks introduce subtle perturbations to input data that deceive the model into making incorrect predictions. Defenses such as adversarial training, which adds adversarial examples to the training set, and input sanitization, which filters or normalizes inputs before inference, can improve a model’s resilience against such attacks.
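To make the idea of a subtle perturbation concrete, the sketch below applies the fast gradient sign method (FGSM), one well-known attack, to a tiny logistic regression model. The weights, input, and `eps` value are hypothetical and chosen only so the effect is visible. This is an illustration of the attack, not a method described by the article.

```python
import math


def sigmoid(z):
    """Logistic function mapping a score to a probability."""
    return 1.0 / (1.0 + math.exp(-z))


def predict_proba(w, b, x):
    """Probability of class 1 under a logistic regression model."""
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)


def sign(v):
    """Return -1, 0, or 1 according to the sign of v."""
    return (v > 0) - (v < 0)


def fgsm(w, b, x, y, eps):
    """Shift each feature by eps in the direction that increases the loss."""
    p = predict_proba(w, b, x)
    # For cross-entropy loss, the gradient with respect to the input is (p - y) * w.
    grad = [(p - y) * wi for wi in w]
    return [xi + eps * sign(g) for xi, g in zip(x, grad)]


# Hypothetical model parameters and input, for illustration only.
w, b = [2.0, -3.0, 1.0], 0.0
x, y = [0.5, 0.1, 0.2], 1
eps = 0.2

x_adv = fgsm(w, b, x, y, eps)
print("clean input classified as class 1:", predict_proba(w, b, x) > 0.5)
print("perturbed input classified as class 1:", predict_proba(w, b, x_adv) > 0.5)
```

With these values, no feature moves by more than 0.2, yet the prediction flips from class 1 to class 0. Adversarial training would generate inputs like `x_adv` during training and include them, with their correct labels, in the training batches.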

Challenges in Achieving AI Model Security

1. Interpretable Models vs. Security

While making AI models more interpretable is a desirable goal for transparency and accountability, it introduces challenges for security. Explanations that reveal how a model reaches its decisions can also help an attacker probe or replicate it. Striking a balance between interpretability and security is therefore a delicate task, and protecting a model without sacrificing the interpretability that users and auditors rely on remains an ongoing challenge in the AI security landscape.

2. Data Privacy Concerns

AI models often rely on vast datasets to learn and generalize patterns. However, this reliance raises concerns about data privacy. Secure AI models must not only protect against external threats but also ensure that sensitive information within the training data remains confidential. Techniques like federated learning, which allows models to be trained across decentralized devices, are gaining prominence for addressing data privacy concerns.
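The core loop of federated learning can be sketched with federated averaging: each client trains on its own data, and only model weights are sent to the server for aggregation. The example below is a simplified illustration under stated assumptions: a one-feature linear model, two hypothetical clients whose data follows y = 2x + 1, and the helper names `local_update` and `federated_average`. Real deployments typically add protections such as secure aggregation, which are not shown here.

```python
def local_update(weights, data, lr=0.05, epochs=20):
    """Run gradient descent for y = w*x + b on one client's private data."""
    w, b = weights
    n = len(data)
    for _ in range(epochs):
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return (w, b)


def federated_average(client_weights, client_sizes):
    """Average client weights, weighting each client by its number of samples."""
    total = sum(client_sizes)
    w = sum(cw[0] * n for cw, n in zip(client_weights, client_sizes)) / total
    b = sum(cw[1] * n for cw, n in zip(client_weights, client_sizes)) / total
    return (w, b)


# Hypothetical private datasets; the raw (x, y) pairs never leave their client.
clients = [
    [(0.0, 1.0), (1.0, 3.0)],
    [(2.0, 5.0), (3.0, 7.0), (4.0, 9.0)],
]

global_weights = (0.0, 0.0)
for _ in range(30):
    # Each client starts from the current global model and trains locally.
    updates = [local_update(global_weights, data) for data in clients]
    # The server sees only the updated weights, not the underlying data.
    global_weights = federated_average(updates, [len(d) for d in clients])

print(global_weights)
```

After 30 rounds the global weights land close to the underlying relationship (w near 2, b near 1), even though no client shared its records. Model updates themselves can still leak information, which is why weight sharing alone is not a full privacy guarantee.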

Strategies for Building Secure AI Models

1. Regular Security Audits

Conducting regular security audits is essential to identify and address vulnerabilities in AI models. These audits should encompass both the model architecture and the surrounding infrastructure. Additionally, organizations should stay informed about emerging threats and update their security measures accordingly.

2. Implementing Robust Access Controls

Controlling access to AI model resources is a fundamental security measure. Only authorized personnel should have access to training data, model parameters, and the infrastructure. Role-based access controls can help enforce these restrictions, reducing the risk of unauthorized manipulation.
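A role-based check can be as simple as a mapping from roles to permitted actions, with unknown roles denied by default. The roles, permissions, and function names below are hypothetical. In practice this logic usually lives in an identity and access management system and not in application code.

```python
from enum import Enum


class Permission(Enum):
    READ_TRAINING_DATA = "read_training_data"
    WRITE_TRAINING_DATA = "write_training_data"
    READ_MODEL_PARAMS = "read_model_params"
    DEPLOY_MODEL = "deploy_model"


# Hypothetical role definitions, for illustration only.
ROLE_PERMISSIONS = {
    "data_engineer": {Permission.READ_TRAINING_DATA, Permission.WRITE_TRAINING_DATA},
    "ml_engineer": {Permission.READ_TRAINING_DATA, Permission.READ_MODEL_PARAMS,
                    Permission.DEPLOY_MODEL},
    "auditor": {Permission.READ_MODEL_PARAMS},
}


def is_allowed(role, permission):
    """Return True only if the role is known and explicitly grants the permission."""
    return permission in ROLE_PERMISSIONS.get(role, set())


def require(role, permission):
    """Raise PermissionError when the role lacks the permission."""
    if not is_allowed(role, permission):
        raise PermissionError(f"{role!r} may not {permission.value}")


require("ml_engineer", Permission.DEPLOY_MODEL)  # passes silently
print(is_allowed("auditor", Permission.WRITE_TRAINING_DATA))  # False
print(is_allowed("intern", Permission.READ_TRAINING_DATA))    # False: unknown role
```

Denying by default means that a role added later has no access until someone grants it explicitly.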

3. Continuous Monitoring and Incident Response

AI model security is an ongoing process that requires continuous monitoring. Robust monitoring systems can detect anomalies and potential security breaches in real time. A well-defined incident response plan ensures that any security issues are addressed promptly, minimizing the impact on the organization.
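As one simple example of such monitoring, the sketch below tracks a rolling baseline of a model health metric (here, an assumed average prediction confidence per batch) and raises an alert when a new value deviates sharply from it. The `ConfidenceMonitor` class, its thresholds, and the sample values are all hypothetical. Production systems typically combine several signals and more robust statistics.

```python
from collections import deque
import statistics


class ConfidenceMonitor:
    """Flag metric values that deviate sharply from a rolling baseline."""

    def __init__(self, window=50, threshold=3.0, min_history=10):
        self.history = deque(maxlen=window)
        self.threshold = threshold
        self.min_history = min_history

    def observe(self, value):
        """Return True if value is more than `threshold` standard deviations from the baseline."""
        alert = False
        if len(self.history) >= self.min_history:
            mean = statistics.mean(self.history)
            std = statistics.pstdev(self.history)
            # A perfectly constant baseline (std == 0) never alerts in this sketch.
            if std > 0 and abs(value - mean) / std > self.threshold:
                alert = True
        if not alert:
            # Keep anomalous values out of the baseline so they do not mask later ones.
            self.history.append(value)
        return alert


monitor = ConfidenceMonitor()
# Hypothetical per-batch average confidence under normal traffic.
baseline = [0.90, 0.92] * 10
alerts = [monitor.observe(v) for v in baseline]
print(any(alerts))            # False
print(monitor.observe(0.55))  # True: a sudden drop in confidence
```

An alert like this would be the trigger for the incident response plan: for example, routing traffic to a previous model version while the cause is investigated.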

Future Directions in AI Model Security

1. Explainable AI and Security

As the field of explainable AI advances, integrating explainability into security measures becomes crucial. Understanding how a model arrives at a decision can aid in identifying and mitigating potential security risks. Future developments may see a convergence of explainability and security in AI models.

2. AI Model Federated Security

With the rise of decentralized technologies, federated security for AI models is gaining attention. This approach involves distributing security measures across the devices contributing to the model, reducing the risk of a single point of failure. As federated learning evolves, so too will the security measures associated with this approach.

Conclusion

As AI continues to reshape industries and redefine the way we interact with technology, securing AI models is of utmost importance. Organizations must recognize the dynamic nature of the threat landscape and proactively implement security measures across all stages of the AI model lifecycle. By addressing these challenges, adopting robust security strategies, and staying abreast of emerging trends, organizations can make secure and responsible AI integration an achievable goal.

To learn more: https://www.leewayhertz.com/ai-model-security/